How To Benchmark Solana RPCs For Real Trading Performance

Most developers measure Solana RPC performance wrong. This post outlines a practical framework for testing what actually matters: slot freshness, tail latency, and transaction landing rates, so you can choose an infrastructure setup that improves execution quality, not just dashboard metrics.


1. The Trade That Never Landed

You’ve built the perfect bot. The logic is sound, the entry signal is clear, and your local latency is low. You spot a mispricing on Raydium. Your bot fires the transaction.

On your dashboard, the request returns a 200 OK in 80ms. You think you won.

But 30 seconds later, you check the explorer: “Blockhash not found” or “Slippage Exceeded.”

Someone else got the trade.

Why? Because while your RPC responded in 80ms, the data it served you was already 2–3 slots old, roughly a full second behind the tip of the chain. You were trading on a ghost of the market. This is the silent killer of Solana trading strategies: Slot Lag.

2. The “Ping” Trap

When most teams benchmark RPCs, they run a simple script that pings getHealth or getVersion and averages the response time.

- Provider A: 45ms average

- Provider B: 120ms average

They pick Provider A. This is a mistake.

In Solana’s high-throughput environment, HTTP latency (how fast the server replies) is distinct from data freshness (how close the server’s state is to the tip of the network).

A heavily cached, overloaded RPC node can reply instantly — with stale data. If you’re 2–3 slots behind the tip, you’re effectively 800–1,200ms in the past. In a block-building war, you are dead on arrival.

The real issue isn’t speed. It’s synchronization.
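To make the latency-versus-freshness distinction concrete, here is a rough back-of-the-envelope model in TypeScript. It assumes a ~400ms slot time (an approximation; real slot times vary) and treats the age of the state you act on as slot lag × slot time plus the request round trip. The slot-lag values for Provider A and Provider B are hypothetical, chosen to match the scenario above.

```typescript
// Assumed average slot duration in ms (an approximation, not a protocol constant).
const SLOT_MS = 400;

interface ProviderProbe {
  name: string;
  httpLatencyMs: number; // round-trip time of a simple RPC call
  slotLag: number;       // slots behind the best-known cluster tip
}

// Rough age of the state you act on: the node's own staleness (lag * slot time)
// plus the time spent on the round trip to fetch it.
function effectiveStalenessMs(p: ProviderProbe): number {
  return p.slotLag * SLOT_MS + p.httpLatencyMs;
}

// Rank providers by effective staleness (freshest first) instead of raw latency.
function rankByStaleness(probes: ProviderProbe[]): string[] {
  return probes
    .slice()
    .sort((a, b) => effectiveStalenessMs(a) - effectiveStalenessMs(b))
    .map((p) => p.name);
}

// Hypothetical numbers: A replies fast but is 3 slots behind; B is slower but at the tip.
const probes: ProviderProbe[] = [
  { name: "Provider A", httpLatencyMs: 45, slotLag: 3 },
  { name: "Provider B", httpLatencyMs: 120, slotLag: 0 },
];
for (const p of probes) {
  console.log(`${p.name}: state is ~${effectiveStalenessMs(p)}ms old on arrival`);
}
console.log("Ranking:", rankByStaleness(probes).join(" > "));
```

Under these assumptions Provider A's state is about 1,245ms old when it reaches you, versus about 120ms for Provider B, which reverses the ranking a ping test would produce.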

3. A Real-World Benchmarking Framework

To truly grade an RPC, you need to ignore vanity metrics and test for execution reliability. Here is the 5-step framework we use at Carbium.

Before you start: Geo-pin first. If your bot runs in Tokyo but your RPC routes to Virginia, you’re adding ~150ms of physics penalty before any of these tests even matter. Verify your provider offers geo-located endpoints and pin to the correct region before running any benchmarks.

A. Slot Freshness — The “Lag” Metric

This is the single most important metric for traders. You need to measure the delta between your RPC’s reported slot and the actual cluster tip.

How to test: Run a script that polls getSlot from your target RPC and compares it against a known “truth” source — ideally a direct validator connection, or at minimum two paid providers side-by-side. Even the Solana Foundation public endpoint can lag, so never rely on a single reference.

Target: Zero lag. A variance of ±1 slot is acceptable occasionally, but consistent lag of 2+ slots means the node is overloaded or poorly peered.
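The freshness check reduces to a small calculation. The TypeScript sketch below takes the highest slot reported by any source as the tip, so one lagging reference cannot hide a stale target. It then classifies a single sample against the thresholds above and flags a node as consistently stale. The function names are illustrative, and the 50% cut-off for "consistent" is an arbitrary assumption you should tune.

```typescript
type LagStatus = "fresh" | "acceptable" | "stale";

// Lag of the target behind the best-known tip. The tip is the highest slot seen
// across the target and all references, so a target ahead of the references reports 0.
function slotLag(targetSlot: number, referenceSlots: number[]): number {
  if (referenceSlots.length === 0) throw new Error("need at least one reference");
  const tip = Math.max(targetSlot, ...referenceSlots);
  return tip - targetSlot;
}

// Thresholds from the text: 0 is the goal, 1 is tolerable occasionally, 2+ is stale.
function classifyLag(lag: number): LagStatus {
  if (lag <= 0) return "fresh";
  if (lag === 1) return "acceptable";
  return "stale";
}

// "Consistent" lag: at least `fraction` of samples are 2+ slots behind.
// The 0.5 default is an arbitrary assumption.
function isConsistentlyStale(lags: number[], fraction = 0.5): boolean {
  if (lags.length === 0) return false;
  const staleCount = lags.filter((l) => l >= 2).length;
  return staleCount / lags.length >= fraction;
}

// Example: target reports slot 250 while two references report 251 and 252.
const lag = slotLag(250, [251, 252]);
console.log(lag, classifyLag(lag)); // prints: 2 stale
```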

B. Transaction Landing Rate — The “Win” Metric

Sending a transaction is easy. Landing it on-chain is hard.

How to test:

- Generate 100 simple memo transactions.

- Send them during a period of high network congestion (typically ~14:00–18:00 UTC).

- Measure how many land within 3 slots (~1.2 seconds).

- Measure how many are dropped entirely.

Why it matters: Many RPCs have poor propagation logic. They accept your transaction but fail to forward it to the current leader validator in time. Your sendTransaction call succeeds, but the transaction never makes it on-chain.
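After the test transactions have been sent, scoring the run is simple bookkeeping. The sketch below assumes your harness records two values for every transaction: the slot observed just before `sendTransaction`, and the slot it was eventually included in (for example, from the `slot` field returned by `getSignatureStatuses`), or `null` if it never landed. The record shape and function names are illustrative, not part of any library.

```typescript
// One record per test transaction, filled in by your own send/poll harness.
interface SendRecord {
  sentAtSlot: number;        // slot observed just before sendTransaction
  landedSlot: number | null; // slot the transaction landed in, or null if it never did
}

interface LandingReport {
  total: number;
  landedInWindow: number; // landed within `windowSlots` of sending
  landedLate: number;     // landed, but outside the window
  dropped: number;        // never landed
  landingRate: number;    // landedInWindow / total
  dropRate: number;       // dropped / total
}

function landingReport(records: SendRecord[], windowSlots = 3): LandingReport {
  let landedInWindow = 0;
  let landedLate = 0;
  let dropped = 0;
  for (const r of records) {
    if (r.landedSlot === null) dropped++;
    else if (r.landedSlot - r.sentAtSlot <= windowSlots) landedInWindow++;
    else landedLate++;
  }
  const total = records.length;
  return {
    total,
    landedInWindow,
    landedLate,
    dropped,
    landingRate: total > 0 ? landedInWindow / total : 0,
    dropRate: total > 0 ? dropped / total : 0,
  };
}

// Illustrative records: two land in time, one lands 4 slots late, one is dropped.
const records: SendRecord[] = [
  { sentAtSlot: 100, landedSlot: 101 },
  { sentAtSlot: 100, landedSlot: 103 },
  { sentAtSlot: 100, landedSlot: 104 },
  { sentAtSlot: 100, landedSlot: null },
];
console.log(landingReport(records));
```

The function reports late landings separately from drops because a transaction that lands outside the window often means a missed trade, but it does not indicate the propagation failure that a drop does.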

C. Tail Latency — P99, Not Average

Averages lie. If your average latency is 50ms but your tail latency (P99 — the slowest 1% of requests) is 2 seconds, you will miss every critical volatility event. The worst 1% is exactly when the market is moving fastest.

How to test: Send 1,000 requests over 5 minutes. Plot the full distribution, not just the mean.

Look for: A tight, flat curve. Spikes in the tail indicate the provider is throttling you or hitting garbage collection pauses on shared infrastructure.
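To see how averages hide the tail, the sketch below computes nearest-rank percentiles and a coarse text histogram. The sample data is synthetic: 985 fast responses at 50ms and 15 slow ones at 2,000ms. For pacing, note that 1,000 requests over 5 minutes works out to one request every 300ms.

```typescript
// Nearest-rank percentile: smallest sample such that at least p% of samples are <= it.
// `sorted` must be in ascending order.
function percentile(sorted: number[], p: number): number {
  if (sorted.length === 0) throw new Error("no samples");
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based rank
  return sorted[Math.min(Math.max(rank, 1), sorted.length) - 1];
}

function summarize(latenciesMs: number[]) {
  const sorted = latenciesMs.slice().sort((a, b) => a - b);
  const avg = sorted.reduce((sum, x) => sum + x, 0) / sorted.length;
  return {
    avg,
    p50: percentile(sorted, 50),
    p99: percentile(sorted, 99),
    max: sorted[sorted.length - 1],
  };
}

// Coarse text histogram: one '#' per 20 samples (rounded up) in each bucket.
function histogram(latenciesMs: number[], bucketMs = 50): string[] {
  const counts = new Map<number, number>();
  for (const x of latenciesMs) {
    const bucket = Math.floor(x / bucketMs) * bucketMs;
    counts.set(bucket, (counts.get(bucket) ?? 0) + 1);
  }
  return Array.from(counts.entries())
    .sort((a, b) => a[0] - b[0])
    .map(([b, n]) => `${b}-${b + bucketMs}ms ${"#".repeat(Math.ceil(n / 20))} (${n})`);
}

// Synthetic data: mostly fast, with a 1.5% slow tail.
const samples = [
  ...Array.from({ length: 985 }, () => 50),
  ...Array.from({ length: 15 }, () => 2000),
];
console.log(summarize(samples));
histogram(samples).forEach((line) => console.log(line));
```

With this data the mean is 79.25ms and the median is 50ms, and both look healthy. P99, however, is 2,000ms.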

D. The “Heavy” Call Test

Not all RPC calls are equal. A getSlot request is tiny and easy to cache. A getProgramAccounts or getSignaturesForAddress request puts significant load on the node’s CPU, memory, and disk I/O.

This is where infrastructure architecture matters. Many generic cloud-hosted RPCs run on shared virtual instances with limited IOPS. When you hit them with a heavy call, they choke, time out, or return partial data. Bare-metal providers — running dedicated hardware with NVMe storage and no noisy neighbors — handle these calls at a fundamentally different level.

How to test: Run a complex getProgramAccounts query with filters. If it times out or takes >5 seconds while a bare-metal provider returns the same query in <500ms, that provider can’t scale with your trading volume.
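As one concrete heavy query, the sketch below builds a filtered `getProgramAccounts` request that returns every SPL Token account owned by a given wallet. Token accounts are 165 bytes, with the owner public key at byte offset 32, and `TokenkegQfeZyiNwAJbNbGKPFXCWuBvf9Ss623VQ5DA` is the SPL Token program ID. The transport is passed in as a `send` function so the timing logic does not depend on any particular HTTP client. `send`, `timeHeavyCall`, and the 5-second default are illustrative choices. Some providers may restrict heavy `getProgramAccounts` queries, so an error could reflect a policy limit rather than a capacity problem.

```typescript
// SPL Token program ID; token accounts are 165 bytes with the owner pubkey at offset 32.
const TOKEN_PROGRAM_ID = "TokenkegQfeZyiNwAJbNbGKPFXCWuBvf9Ss623VQ5DA";

// JSON-RPC body for a filtered getProgramAccounts: all token accounts owned by `owner`.
function tokenAccountsByOwnerRequest(owner: string) {
  return {
    jsonrpc: "2.0",
    id: 1,
    method: "getProgramAccounts",
    params: [
      TOKEN_PROGRAM_ID,
      {
        encoding: "base64",
        filters: [{ dataSize: 165 }, { memcmp: { offset: 32, bytes: owner } }],
      },
    ],
  };
}

// Transport is injected so timing does not depend on a specific HTTP client.
type Send = (body: string) => Promise<unknown>;

interface HeavyCallResult {
  status: "ok" | "timeout";
  elapsedMs: number;
}

// Times one call and stops waiting after `timeoutMs` (5 s mirrors the threshold above).
// Errors thrown by `send` propagate to the caller.
async function timeHeavyCall(send: Send, body: object, timeoutMs = 5000): Promise<HeavyCallResult> {
  const start = Date.now();
  let timer: ReturnType<typeof setTimeout> | undefined = undefined;
  const timeout = new Promise<"timeout">((resolve) => {
    timer = setTimeout(() => resolve("timeout"), timeoutMs);
  });
  try {
    const status = await Promise.race([
      send(JSON.stringify(body)).then(() => "ok" as const),
      timeout,
    ]);
    return { status, elapsedMs: Date.now() - start };
  } finally {
    clearTimeout(timer);
  }
}

// Usage (Node 18+ provides a global fetch); RPC_URL and the wallet key are placeholders:
// const send: Send = (body) =>
//   fetch(RPC_URL, { method: "POST", headers: { "Content-Type": "application/json" }, body })
//     .then((r) => r.json());
// timeHeavyCall(send, tokenAccountsByOwnerRequest("<WALLET_PUBKEY>")).then(console.log);
```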

E. Geographic Consistency

Solana is global. If your bot runs in Tokyo but the RPC routes you to a node in Virginia, you’re adding ~150ms of speed-of-light penalty before any processing even begins.

How to verify your actual routing:

```bash
# Time the DNS lookup, TCP connect, and full request from this machine
curl -s -w "\nDNS: %{time_namelookup}s | Connect: %{time_connect}s | Total: %{time_total}s\n" \
  -X POST "https://api.carbium.io/v1/<YOUR_KEY>" \
  -H "Content-Type: application/json" \
  -d '{"jsonrpc":"2.0","id":1,"method":"getSlot"}'
```

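Run this from the same machine as your bot. Note that curl reports time_connect cumulatively from the start of the request, so subtract time_namelookup to isolate the TCP handshake, which takes roughly one network round trip. If that is above 80ms, you are not hitting a geographically close node. Ask your provider about regional endpoints or dedicated routing.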

Check: Does the provider offer geo-located endpoints? Can you pin a connection to us-east, eu-central, or asia-northeast?
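The ~150ms penalty follows from physics. The sketch below estimates the minimum possible round trip from great-circle distance. It assumes that light in optical fiber covers roughly 200 km per millisecond (about two-thirds of its speed in vacuum). The coordinates for Tokyo and for Ashburn, Virginia (a major US-East data-center hub) are approximate, and the function names are illustrative.

```typescript
const EARTH_RADIUS_KM = 6371; // mean Earth radius
// Assumption: light in fiber travels ~200 km per ms (roughly 2/3 of c in vacuum).
const FIBER_KM_PER_MS = 200;

const toRad = (deg: number) => (deg * Math.PI) / 180;

// Great-circle distance between two points (haversine formula), in km.
function haversineKm(lat1: number, lon1: number, lat2: number, lon2: number): number {
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(a));
}

// Physical lower bound on one round trip over a straight fiber path.
// Real routes are longer and add router hops, so observed RTTs will be higher.
function minRttMs(distanceKm: number): number {
  return (2 * distanceKm) / FIBER_KM_PER_MS;
}

// Approximate coordinates: Tokyo and Ashburn, Virginia.
const km = haversineKm(35.68, 139.69, 39.04, -77.49);
console.log(`Tokyo -> Ashburn: ~${km.toFixed(0)} km, RTT floor ~${minRttMs(km).toFixed(0)} ms`);
```

Tokyo to Ashburn comes out at roughly 10,900km. That sets a floor of about 109ms per round trip before any routing detours, so the ~150ms seen in practice is plausible. By the same arithmetic, an 80ms handshake means the server could be as much as ~8,000km of fiber away.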

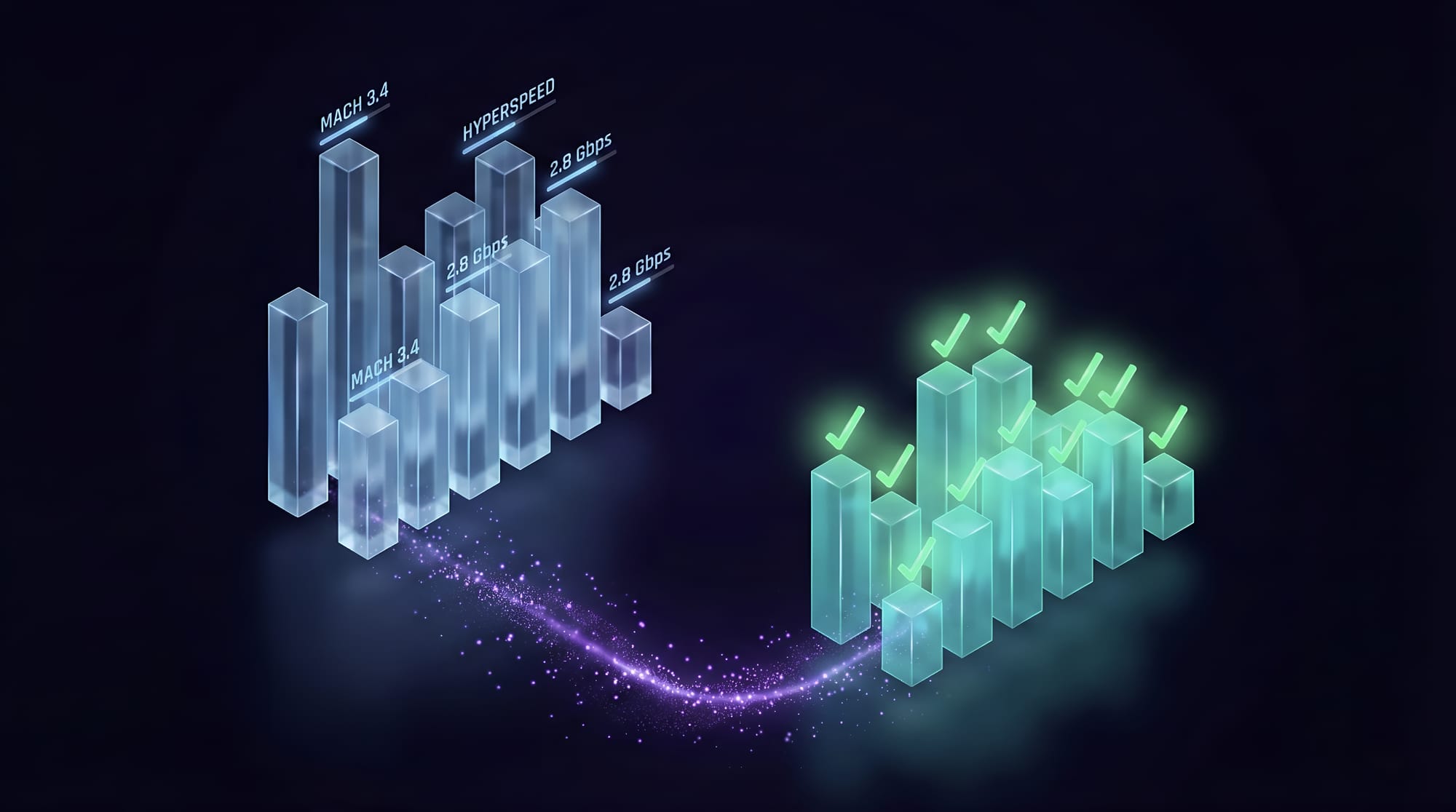

At a Glance: What to Expect

Run all five tests and compare your current provider against a bare-metal alternative. Here’s what the numbers typically look like:

| Metric | Shared Cloud RPC | Bare-Metal (e.g. Carbium) |
|---|---|---|
| Slot lag (typical) | 2–5 slots | <1 slot |
| Slot lag (congestion) | 5–15 slots | 1–2 slots |
| P50 latency | 40–80ms | 20–50ms |
| P99 tail latency | 800ms–3s | <150ms |
| Landing rate (off-peak) | 85–95% | 97–99% |
| Landing rate (congestion) | 50–75% | 95–98% |
| getProgramAccounts (heavy) | 2–10s / timeout | 200–600ms |

Numbers are based on comparative testing during Q1 2026 peak congestion windows. Run your own tests; your mileage will vary by region, call type, and time of day.

4. How Carbium Approaches This

At Carbium, we built our infrastructure specifically because standard RPCs are designed for generic web apps, not trading. A wallet checking a balance doesn’t care if the data is 400ms old. A liquidation bot does.

Here’s how we address each metric in the framework above:

Smart Routing: We don’t send your request to a random server. We route it to the node with the lowest slot lag relative to the current block leader. The result: consistent sub-1 slot freshness, not just fast HTTP responses.

Bare-Metal Infrastructure: Our nodes run on dedicated bare-metal servers with NVMe storage, not shared cloud VMs. This is why heavy calls like getProgramAccounts return in hundreds of milliseconds instead of timing out. No noisy neighbors, no throttling.

Transparency: We don’t hide behind averages. Our dashboard shows real-time slot lag and landing metrics, not just HTTP response times. We built the benchmarking framework above because we want you to verify our claims, not just trust them.
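If you run several endpoints, you can approximate the idea of routing to the freshest node on the client side. The sketch below is a hypothetical illustration, not Carbium's implementation. It keeps only endpoints within `maxLagSlots` of the highest slot observed in one probe round, then picks the lowest-latency endpoint among them. Probe collection (for example, timing `getSlot` on each endpoint) is assumed to happen elsewhere, and the example URLs are placeholders.

```typescript
// Result of probing one endpoint with getSlot (collected elsewhere).
interface EndpointProbe {
  url: string;
  slot: number;      // slot reported by this endpoint
  latencyMs: number; // round-trip time of the probe
}

// Hypothetical "freshest node" selection: keep endpoints within `maxLagSlots`
// of the highest slot seen, then pick the lowest-latency one among them.
function pickFreshest(probes: EndpointProbe[], maxLagSlots = 1): EndpointProbe {
  if (probes.length === 0) throw new Error("no probes");
  const tip = Math.max(...probes.map((p) => p.slot));
  const fresh = probes.filter((p) => tip - p.slot <= maxLagSlots);
  // `fresh` is never empty: the endpoint that reported `tip` always qualifies.
  return fresh.reduce((best, p) => (p.latencyMs < best.latencyMs ? p : best));
}

// Placeholder endpoints: A is fastest but 3 slots behind.
const round: EndpointProbe[] = [
  { url: "https://rpc-a.example", slot: 100, latencyMs: 20 },
  { url: "https://rpc-b.example", slot: 103, latencyMs: 80 },
  { url: "https://rpc-c.example", slot: 102, latencyMs: 40 },
];
console.log(pickFreshest(round).url); // prints: https://rpc-c.example
```

Filtering on freshness before considering latency means a fast but lagging endpoint (A here) is never chosen over one that is nearly at the tip.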

During peak congestion windows, Carbium endpoints consistently maintain 97%+ transaction landing rates with sub-50ms median latency and sub-1 slot freshness. Run the tests yourself and compare your results against the table above.


5. Start Benchmarking Today

Don’t take our word for it. Run the tests.

Use the scripts below, point your bot at our endpoint, and compare your landing rates side-by-side with whatever you’re running now.

- Stop guessing. Measure your slot lag.

- Stop failing. Improve your landing rate.

- Start trading.

Ready to upgrade your execution? Start for free.

Appendix: Benchmarking Scripts

Node.js (recommended)

No dependencies beyond the standard @solana/web3.js you already have.

```typescript
// Solana RPC Slot Freshness & Latency Benchmark
// Run: npx ts-node rpc-benchmark.ts (or compile with tsc)
// Requires: npm install @solana/web3.js

import { Connection } from "@solana/web3.js";

const endpoints: Record<string, string> = {
  "Public/Free": "https://api.mainnet-beta.solana.com",
  Carbium: "https://api.carbium.io/v1/<YOUR_API_KEY>",
};

const NUM_SAMPLES = 100;
const DELAY_MS = 100;

// Nearest-rank percentile over an ascending-sorted array
// (for n = 100, p99 is the 99th value, not the maximum).
function percentile(sorted: number[], p: number): number {
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Measures getSlot round-trip time against a single endpoint.
async function benchmark(name: string, url: string) {
  const conn = new Connection(url, "confirmed");
  const latencies: number[] = [];

  for (let i = 0; i < NUM_SAMPLES; i++) {
    const start = performance.now();
    await conn.getSlot();
    latencies.push(performance.now() - start);
    await new Promise((r) => setTimeout(r, DELAY_MS));
  }

  latencies.sort((a, b) => a - b);
  const avg = latencies.reduce((a, b) => a + b, 0) / latencies.length;

  console.log(`\n${name}`);
  console.log(`  avg: ${avg.toFixed(1)}ms`);
  console.log(`  p50: ${percentile(latencies, 50).toFixed(1)}ms`);
  console.log(`  p99 (tail latency): ${percentile(latencies, 99).toFixed(1)}ms  ← watch this one`);
}

// Polls getSlot on all endpoints concurrently and reports each one's lag behind
// the highest slot seen in that round. With only two endpoints this is a relative
// measure; add a trusted third reference for a better estimate of the tip.
async function slotFreshnessCheck() {
  console.log("\n--- Slot Freshness ---");
  const connections = Object.entries(endpoints).map(([name, url]) => ({
    name,
    conn: new Connection(url, "confirmed"),
  }));

  for (let i = 0; i < 5; i++) {
    const results = await Promise.all(
      connections.map(async ({ name, conn }) => ({ name, slot: await conn.getSlot() }))
    );
    const maxSlot = Math.max(...results.map((r) => r.slot));

    results.forEach(({ name, slot }) => {
      const lag = maxSlot - slot;
      const flag = lag >= 2 ? " ⚠ STALE" : lag === 0 ? " ✓" : "";
      console.log(`${name}: slot ${slot} (lag: ${lag})${flag}`);
    });
    console.log("");
    await new Promise((r) => setTimeout(r, 1000));
  }
}

(async () => {
  console.log(`Sampling ${NUM_SAMPLES} requests per endpoint...\n`);
  for (const [name, url] of Object.entries(endpoints)) {
    await benchmark(name, url);
  }
  await slotFreshnessCheck();
  console.log("\n⚠ Run during peak hours (14:00–18:00 UTC) for meaningful results.");
})();
```

Python (alternative)

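```python
# Solana RPC Slot Freshness & Latency Benchmark
# Requires: pip install httpx

import asyncio
import math
import statistics
import time

import httpx

endpoints = {
    "Public/Free": "https://api.mainnet-beta.solana.com",
    "Carbium": "https://api.carbium.io/v1/<YOUR_API_KEY>",
}

NUM_SAMPLES = 100
DELAY_BETWEEN = 0.1  # seconds
PAYLOAD = {"jsonrpc": "2.0", "id": 1, "method": "getSlot"}


def percentile(sorted_vals, p):
    """Nearest-rank percentile over an ascending-sorted list."""
    rank = math.ceil(p / 100 * len(sorted_vals))
    return sorted_vals[max(rank, 1) - 1]


async def get_slot(client: httpx.AsyncClient, url: str) -> int:
    r = await client.post(url, json=PAYLOAD)
    r.raise_for_status()
    return r.json()["result"]


async def benchmark(name: str, url: str):
    latencies = []
    async with httpx.AsyncClient(timeout=10) as client:
        for _ in range(NUM_SAMPLES):
            start = time.perf_counter()
            await get_slot(client, url)
            latencies.append((time.perf_counter() - start) * 1000)
            await asyncio.sleep(DELAY_BETWEEN)

    latencies.sort()
    print(f"\n{name}")
    print(f"  avg: {statistics.mean(latencies):.1f}ms")
    print(f"  p50: {percentile(latencies, 50):.1f}ms")
    print(f"  p99 (tail latency): {percentile(latencies, 99):.1f}ms  ← watch this one")


async def slot_freshness_check(rounds: int = 5):
    """Polls all endpoints concurrently and reports lag behind the highest slot seen."""
    print("\n--- Slot Freshness ---")
    async with httpx.AsyncClient(timeout=10) as client:
        for _ in range(rounds):
            slots = await asyncio.gather(*(get_slot(client, u) for u in endpoints.values()))
            tip = max(slots)
            for name, slot in zip(endpoints, slots):
                lag = tip - slot
                flag = " ⚠ STALE" if lag >= 2 else (" ✓" if lag == 0 else "")
                print(f"{name}: slot {slot} (lag: {lag}){flag}")
            print()
            await asyncio.sleep(1)


async def main():
    print(f"Sampling {NUM_SAMPLES} requests per endpoint...\n")
    for name, url in endpoints.items():
        await benchmark(name, url)
    await slot_freshness_check()
    print("\n⚠ Run during peak hours (14:00–18:00 UTC) for meaningful results.")


asyncio.run(main())
```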

Tip: Run both scripts during peak congestion hours (~14:00–18:00 UTC) or during a high-demand event such as a new token launch or mint. Off-peak results can make any provider look good. The tail latency (P99) number matters most, because that’s what kills your bot at the moments you need it most.