The CQ1 Engine Architecture

Deliver sub-millisecond quotes on Solana with binary state management and real-time data ingestion.

CQ1 is a fundamental re-architecture of DEX aggregation.

Rather than optimizing existing patterns, CQ1 introduces massively parallel pathfinding and binary-native state management applied to on-chain liquidity routing.

1. System Architecture

CQ1 operates on a three-layer architecture that cleanly separates concerns: an API layer for request handling, a core router for computation, and a data ingestion layer for real-time state synchronization.

The three layers:

- API Layer: Express gateway and quote controller handling incoming requests

- Core Router: Parallel path engine, math core, and DEX adapters (Raydium, Orca, Meteora)

- Data Ingestion: gRPC listener for real-time updates, weight scheduler for periodic sync

State Layer:

- Redis: Binary buffers for hot state (sub-millisecond reads)

- MongoDB: Graph topology for structural data

The critical insight here is the decoupling of read and write paths. Quote generation (the read path) never blocks on state updates (the write path). The API reads from pre-computed binary buffers while background workers continuously synchronize state from the chain.

Preview of pathfinding at https://app.carbium.io

2. The Parallel Router: Massively Concurrent Pathfinding

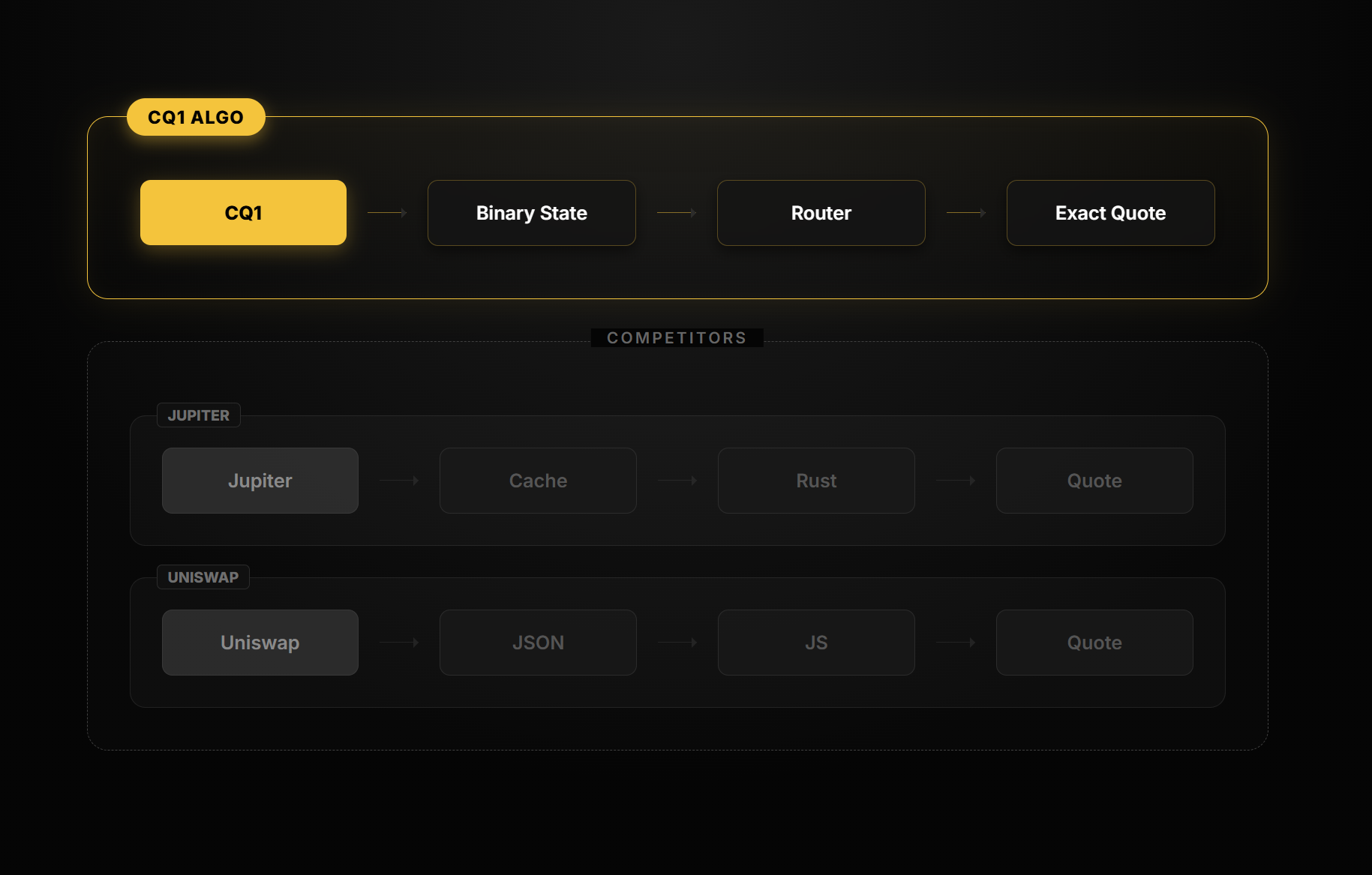

Traditional DEX aggregators route trades using algorithms that evaluate paths sequentially or with limited threading. CQ1 takes a fundamentally different approach: massively parallel evaluation of the entire solution space.

The Routing Problem

Finding the optimal route across dozens of liquidity pools with multi-hop paths is a graph search problem. For a trade like SOL → USDC, the router must evaluate direct paths, two-hop paths (SOL → BONK → USDC), and potentially three-hop paths, each with different pool types (AMM, CLMM, Stable) and varying liquidity depths.

Traditional routers evaluate hundreds or thousands of these paths. The CQ1 engine evaluates millions, simultaneously.

The parallel router processes:

- Direct paths across all viable pools

- 2-hop routes via major token hubs

- 2-hop routes via stablecoins

- N-hop combinations up to configured depth

All of these are evaluated concurrently, and the router returns the optimal route with its split allocation.
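To make the candidate sets above concrete, here is a minimal sketch of enumerating direct and 2-hop paths over a small pool list. The `Pool` shape, the hub list, and the function names are illustrative assumptions rather than CQ1's actual schema, and the loop runs sequentially for readability where the engine evaluates candidates in parallel.

```typescript
// Hypothetical pool edge: names and fields are illustrative, not CQ1's schema.
interface Pool {
  id: string;
  tokenA: string;
  tokenB: string;
}

type Path = Pool[]; // ordered list of pools, one per hop

// Returns the token on the other side of `pool`, or null if `token` is not in it.
function otherSide(pool: Pool, token: string): string | null {
  if (pool.tokenA === token) return pool.tokenB;
  if (pool.tokenB === token) return pool.tokenA;
  return null;
}

// Enumerate direct paths plus 2-hop paths that pass through one of the hub tokens.
function enumeratePaths(pools: Pool[], src: string, dst: string, hubs: string[]): Path[] {
  const paths: Path[] = [];
  for (const first of pools) {
    const mid = otherSide(first, src);
    if (mid === null) continue; // pool does not contain the source token
    if (mid === dst) {
      paths.push([first]); // direct path
      continue;
    }
    if (hubs.indexOf(mid) === -1) continue; // only route through configured hubs
    for (const second of pools) {
      if (second.id !== first.id && otherSide(second, mid) === dst) {
        paths.push([first, second]); // 2-hop path via the hub token
      }
    }
  }
  return paths;
}

const pools: Pool[] = [
  { id: "p1", tokenA: "SOL", tokenB: "USDC" },
  { id: "p2", tokenA: "SOL", tokenB: "BONK" },
  { id: "p3", tokenA: "BONK", tokenB: "USDC" },
  { id: "p4", tokenA: "SOL", tokenB: "USDT" },
];
const found = enumeratePaths(pools, "SOL", "USDC", ["BONK", "USDT"]);
```

With this sample data, `found` holds the direct path through `p1` and the 2-hop path `p2` then `p3`; `p4` is dropped because no pool connects USDT to USDC.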

Waterfill Split Algorithm

For larger trades, executing through a single path creates excessive slippage. The CQ1 router implements a waterfill algorithm that treats liquidity pools as containers with different depths. It "fills" pools in order of price efficiency, splitting the trade across multiple routes to minimize total slippage.

Practical example: a 10,000 USDC trade might split 60% through Raydium CLMM (deepest liquidity), 25% through Orca Whirlpool, and 15% through a Meteora stable pool. The router evaluates all viable splits in parallel and returns the combination with the highest output.
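One simple way to approximate a waterfill split is to hand out the trade in small chunks, always giving the next chunk to the pool with the best marginal output. The sketch below does this over fee-free constant-product pools. The pool shape, reserve values, and chunk count are assumptions chosen for illustration, and greedy chunking is a stand-in technique, not necessarily how the CQ1 router computes its splits.

```typescript
// Hypothetical constant-product pool; fees are ignored to keep the sketch short.
interface CpPool {
  name: string;
  reserveIn: bigint;
  reserveOut: bigint;
}

// Output of swapping `amountIn` into a constant-product pool (x * y = k), rounded down.
function amountOut(pool: CpPool, amountIn: bigint): bigint {
  return (pool.reserveOut * amountIn) / (pool.reserveIn + amountIn);
}

// Greedy waterfill: split `total` into `steps` chunks and give each chunk to the
// pool whose output grows the most, given what that pool has already been allocated.
function waterfill(pools: CpPool[], total: bigint, steps: bigint): Map<string, bigint> {
  if (pools.length === 0) throw new Error("at least one pool is required");
  if (steps <= 0n) throw new Error("steps must be positive");
  const alloc = new Map<string, bigint>();
  pools.forEach((p) => alloc.set(p.name, 0n));
  const chunk = total / steps;
  let remaining = total;
  while (remaining > 0n) {
    // The last chunk absorbs any remainder left by integer division.
    const size = chunk === 0n || remaining < chunk ? remaining : chunk;
    let best = pools[0];
    let bestGain = -1n;
    for (const p of pools) {
      const current = alloc.get(p.name)!;
      const gain = amountOut(p, current + size) - amountOut(p, current);
      if (gain > bestGain) {
        best = p;
        bestGain = gain;
      }
    }
    alloc.set(best.name, alloc.get(best.name)! + size);
    remaining -= size;
  }
  return alloc;
}

// Two equally priced pools, one ten times deeper than the other (base units).
const deep: CpPool = { name: "deep", reserveIn: 1000000000000n, reserveOut: 1000000000000n };
const shallow: CpPool = { name: "shallow", reserveIn: 100000000000n, reserveOut: 100000000000n };
const totalIn = 11000000000n;
const split = waterfill([deep, shallow], totalIn, 110n);
```

Because each additional unit pushed into a pool worsens that pool's price, the deeper pool absorbs most of the trade while the shallow pool takes a smaller share, and the combined output beats sending everything through the deep pool alone.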

3. Binary State Management

The second innovation is how CQ1 stores and retrieves pool state. Most aggregators store pool data as JSON objects or cached structs that require serialization/deserialization on every read.

CQ1 stores pool state as raw binary buffers in Redis — the exact byte layout used on-chain. When the Math Core needs to calculate output amounts, it reads the buffer directly into memory without parsing.

Traditional approach: JSON String → Parse → Object → Compute

CQ1 approach: Binary Buffer → Compute
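The difference between the two read paths can be sketched as follows. The 18-byte layout here is invented purely for illustration and does not correspond to any real on-chain account layout; the function names are likewise hypothetical. A `DataView` is used so the example runs anywhere, and a Node.js `Buffer` (which is a `Uint8Array`) could be passed in the same way.

```typescript
// Hypothetical 18-byte layout, for illustration only (not a real on-chain layout):
//   offset 0:  reserveA, u64 little-endian
//   offset 8:  reserveB, u64 little-endian
//   offset 16: feeBps,   u16 little-endian
const RESERVE_A_OFFSET = 0;
const RESERVE_B_OFFSET = 8;
const FEE_BPS_OFFSET = 16;
const LAYOUT_SIZE = 18;

// Writes a pool snapshot in the layout above (stands in for the ingestion side).
function encodePool(reserveA: bigint, reserveB: bigint, feeBps: number): Uint8Array {
  const bytes = new Uint8Array(LAYOUT_SIZE);
  const view = new DataView(bytes.buffer);
  view.setBigUint64(RESERVE_A_OFFSET, reserveA, true);
  view.setBigUint64(RESERVE_B_OFFSET, reserveB, true);
  view.setUint16(FEE_BPS_OFFSET, feeBps, true);
  return bytes;
}

// Binary path: read the two reserves at fixed offsets and compute immediately.
// The DataView is a view over the existing bytes; nothing is copied or parsed.
function computeFromBuffer(bytes: Uint8Array, amountIn: bigint): bigint {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const reserveA = view.getBigUint64(RESERVE_A_OFFSET, true);
  const reserveB = view.getBigUint64(RESERVE_B_OFFSET, true);
  return (reserveB * amountIn) / (reserveA + amountIn);
}

// JSON path: parse the whole string, build an object, convert fields, then compute.
function computeFromJson(json: string, amountIn: bigint): bigint {
  const obj = JSON.parse(json) as { reserveA: string; reserveB: string };
  const reserveA = BigInt(obj.reserveA);
  const reserveB = BigInt(obj.reserveB);
  return (reserveB * amountIn) / (reserveA + amountIn);
}

const binaryState = encodePool(1000000000n, 2000000000n, 30);
const jsonState = JSON.stringify({ reserveA: "1000000000", reserveB: "2000000000", feeBps: 30 });

const fromBuffer = computeFromBuffer(binaryState, 1000000n);
const fromJson = computeFromJson(jsonState, 1000000n);
```

Both paths produce the same number; the binary path simply skips the parse and object-allocation steps, and only touches the fields the calculation needs.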

Comparison

| Approach | Storage Format | Read Overhead | Data Freshness |
|---|---|---|---|
| JSON/REST Polling | Text (JSON) | Parse + deserialize | ~200-500ms stale |
| Cached Objects | Serialized structs | Deserialize | ~50-100ms stale |
| CQ1 Binary State | Raw bytes | Zero-copy read | Real-time (push) |

4. Real-Time Data Ingestion

Binary buffers are only useful if they reflect current on-chain state. CQ1 uses a dual-strategy ingestion system that ensures the quote engine always operates on fresh data.

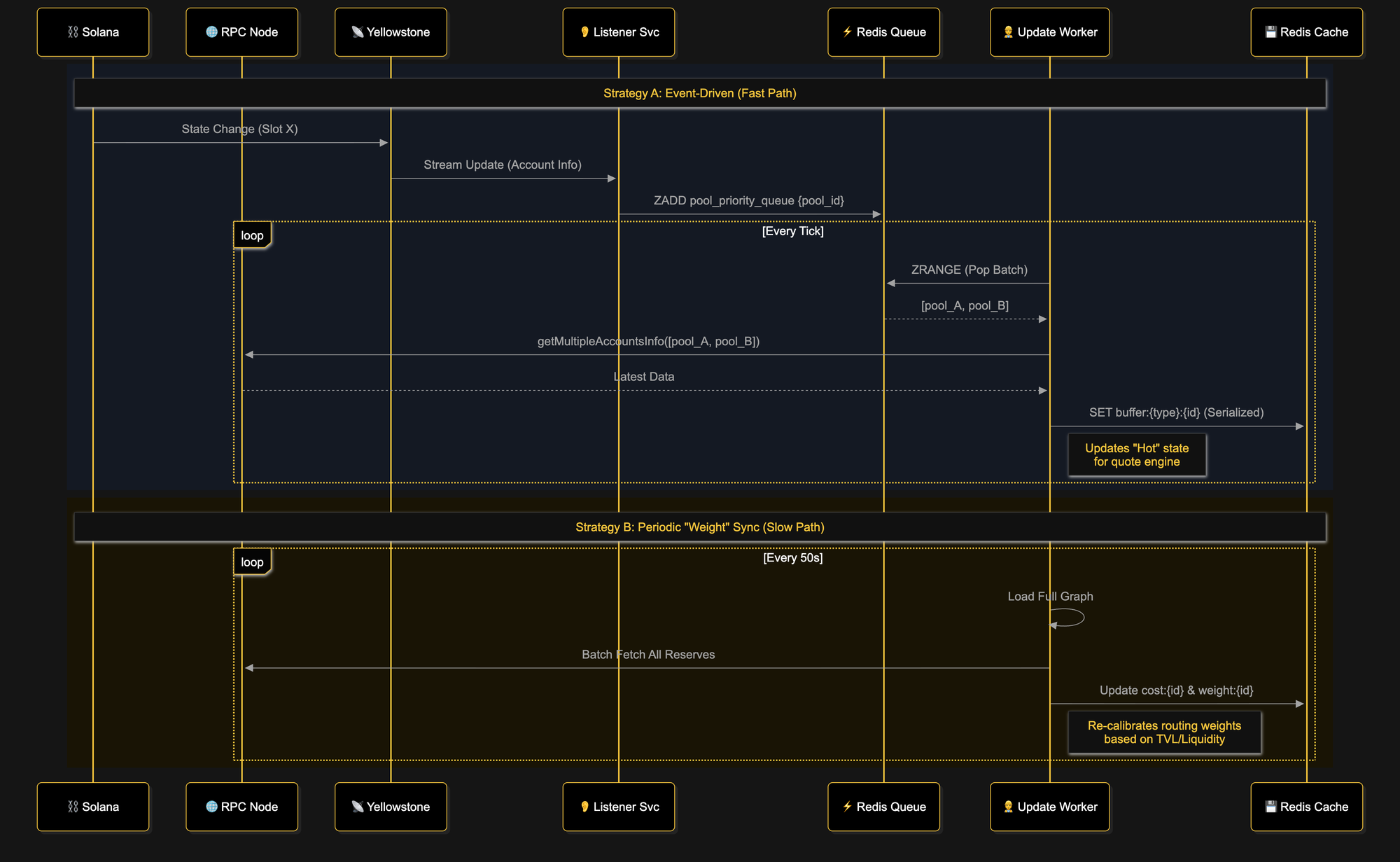

Strategy A: Event-Driven (Fast Path)

Rather than polling RPC nodes ("What's the current state?"), CQ1 subscribes to validator streams via Yellowstone gRPC. The moment an account changes on-chain, the update is pushed to our listener service.

The flow:

- State change occurs on Solana (Slot X)

- Yellowstone gRPC streams the account update to our Listener Service

- Listener adds the pool to a priority queue in Redis (ZADD pool_priority_queue)

- Update Worker continuously pops batches from the queue (ZRANGE)

- Worker fetches latest data via getMultipleAccountsInfo

- Binary buffer written to cache (SET buffer:{type}:{id})
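The queue-and-worker portion of this flow can be sketched with an in-memory stand-in for the Redis sorted set. Several details are assumptions made for the example: the score is taken to be the slot number, `popBatch` models a ZRANGE read followed by removal of the returned members, the `amm` pool type in the cache key is a placeholder, and the fetch callback is a synchronous stub where a real worker would await an RPC batch call.

```typescript
// In-memory stand-in for a Redis sorted set; only the semantics used here are modeled.
class PoolPriorityQueue {
  private scores = new Map<string, number>();

  // Like ZADD: adding an existing member only updates its score, so a pool that
  // changes many times in a row still occupies a single queue entry.
  add(member: string, score: number): void {
    this.scores.set(member, score);
  }

  // Models a ZRANGE read of the n lowest-scored members followed by their removal.
  popBatch(n: number): string[] {
    const batch = Array.from(this.scores.entries())
      .sort((a, b) => a[1] - b[1])
      .slice(0, n)
      .map((entry) => entry[0]);
    batch.forEach((member) => this.scores.delete(member));
    return batch;
  }
}

// Hypothetical batch fetcher: stands in for an RPC call returning raw account data.
type FetchAccounts = (ids: string[]) => Map<string, Uint8Array>;

// One worker iteration: pop a batch, fetch fresh bytes, overwrite the cached buffers.
function runWorkerOnce(
  queue: PoolPriorityQueue,
  cache: Map<string, Uint8Array>,
  fetchAccounts: FetchAccounts,
  batchSize: number,
): number {
  const ids = queue.popBatch(batchSize);
  if (ids.length === 0) return 0;
  const accounts = fetchAccounts(ids);
  accounts.forEach((data, id) => cache.set("buffer:amm:" + id, data));
  return ids.length;
}

const queue = new PoolPriorityQueue();
const cache = new Map<string, Uint8Array>();

// Listener side: account updates arrive and are queued by slot (assumed score).
queue.add("poolA", 100);
queue.add("poolB", 101);
queue.add("poolA", 102); // poolA changed again: score updated, still one entry

const stubFetch: FetchAccounts = (ids) => {
  const out = new Map<string, Uint8Array>();
  ids.forEach((id) => out.set(id, new Uint8Array([1, 2, 3])));
  return out;
};

const processed = runWorkerOnce(queue, cache, stubFetch, 10);
```

Deduplicating by member is what keeps a busy pool from flooding the queue: however many updates arrive between worker iterations, the worker fetches that pool's latest state once.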

This "hot" state powers the quote engine with ~10ms end-to-end latency from on-chain change to queryable state.

Strategy B: Periodic Weight Sync (Slow Path)

Every ~50 seconds, a background process recalibrates the global routing graph:

- Load full graph topology

- Batch fetch all pool reserves from RPC

- Update routing costs and weights in cache (cost:{id}, weight:{id})

This ensures the GPU router's heuristics stay aligned with actual TVL and liquidity distribution across all pools, not just the actively traded ones.

5. Technical Architecture Details

Hybrid State Management

The system maintains two distinct data paths optimized for their access patterns:

Hot Path (Redis Buffers)

Stores raw binary buffers (Buffer) directly in Redis. This approach minimizes serialization overhead and matches on-chain data structures exactly. When the Math Core needs pool state, it reads the bytes directly, without parsing or object instantiation.

Cold Path (MongoDB + In-Memory Graph)

Structural data (pool metadata, token relationships, graph edges) persists in MongoDB but loads into an in-memory Map (poolsCache) at startup. This provides O(1) lookups during routing without hitting the database on every request.

Offloaded Pathfinding

Complex multi-hop routing runs on a specialized compute service (Port 8000). The TypeScript API layer handles:

- Request validation and rate limiting

- Orchestration of the quote flow

- Final response assembly

The routing engine handles:

- Parallel path enumeration

- Waterfill-split optimization

- Route scoring and selection

This separation keeps the API responsive while the heavy computation runs on dedicated infrastructure.

Precision Math

All swap calculations use:

- BN (BigNumber) for arbitrary precision integer math

- Exact curve implementations matching on-chain programs:

  - Constant Product (x * y = k) for standard AMMs

  - Concentrated Liquidity with tick traversal for CLMM pools

  - Stable Swap invariant for pegged asset pools

The goal: quotes match on-chain execution results exactly. No estimates, no approximations, no "slippage exceeded" surprises.
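As an illustration of why integer math matters here, the sketch below computes a constant-product swap with native `bigint`, used as a stand-in for BN. The fee handling (basis points taken from the input, rounding down at each step) is a common textbook form and an assumption for this example; each on-chain program defines its own fee and rounding rules, which an exact replica has to follow.

```typescript
const BPS_DENOMINATOR = 10000n;

// Constant-product swap (x * y = k) with an input-side fee in basis points.
// All arithmetic is integer-only and rounds down, so no precision is lost
// regardless of how large the reserves are.
function constantProductOut(
  reserveIn: bigint,
  reserveOut: bigint,
  amountIn: bigint,
  feeBps: bigint,
): bigint {
  if (amountIn <= 0n || reserveIn <= 0n || reserveOut <= 0n) return 0n;
  const amountInAfterFee = (amountIn * (BPS_DENOMINATOR - feeBps)) / BPS_DENOMINATOR;
  return (reserveOut * amountInAfterFee) / (reserveIn + amountInAfterFee);
}

const reserveIn = 1000000000000n;
const reserveOut = 2000000000000n;
const amountIn = 1000000000n;
const out = constantProductOut(reserveIn, reserveOut, amountIn, 25n);

// After the swap, the product of reserves must not have decreased.
const kBefore = reserveIn * reserveOut;
const kAfter = (reserveIn + amountIn) * (reserveOut - out);

// Why floats are not an option: 2^53 + 1 cannot be represented as a JS number,
// so converting to Number and back silently changes the value.
const big = 9007199254740993n;
const roundTripped = BigInt(Number(big));
const floatLostPrecision = roundTripped !== big;
```

Intermediate products such as `reserveOut * amountInAfterFee` exceed 2^53 for realistic token amounts, which is why a floating-point implementation cannot match on-chain results to the last unit.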

6. Smart Water-Fill Execution Flow

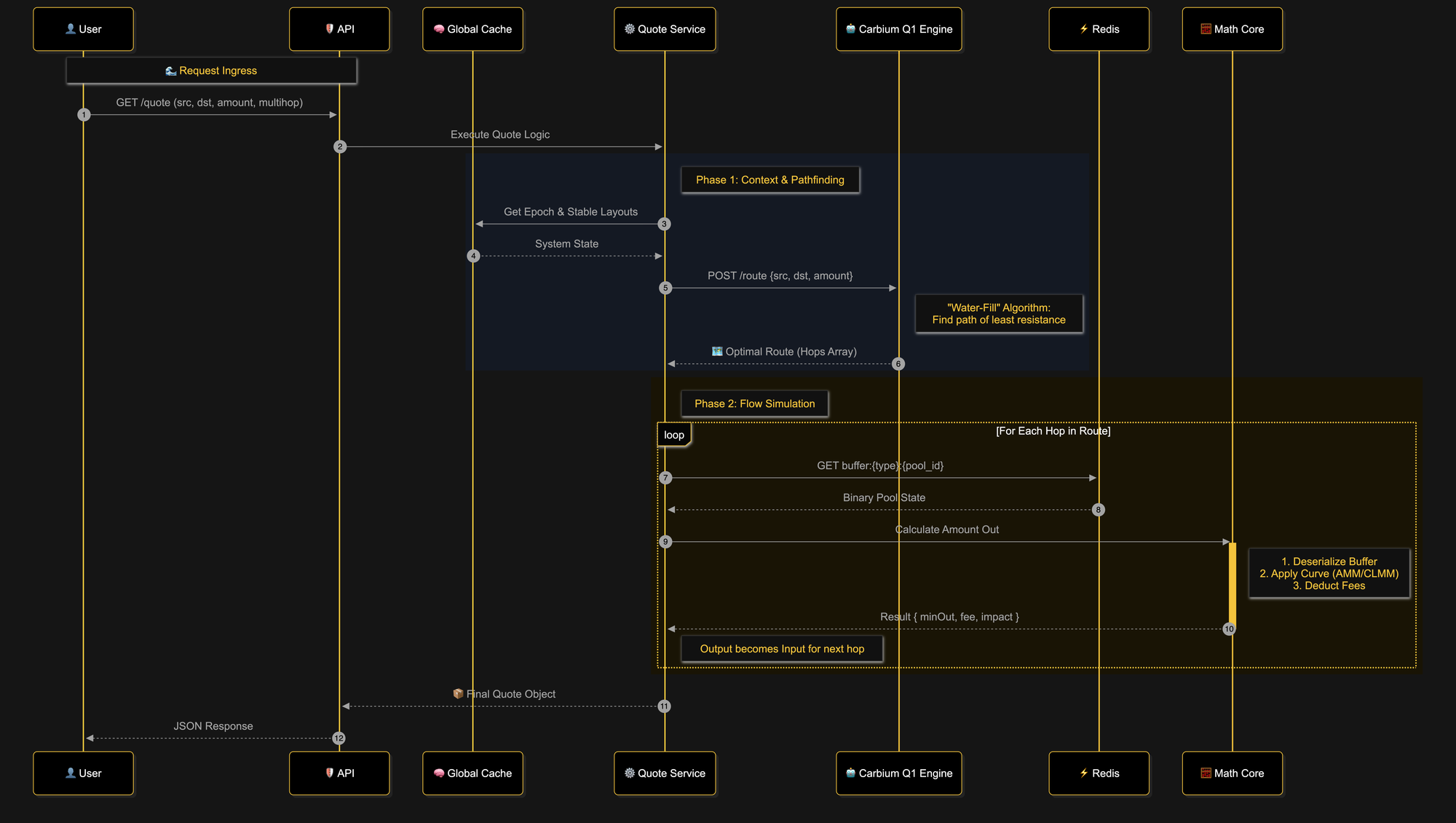

Here's the complete quote generation flow, from user request to final output:

Phase 1: Pathfinding

- User sends GET /quote (source token, destination token, amount)

- API forwards to Quote Service

- Quote Service fetches epoch and token layouts from global cache

- Request sent to Routing Engine for path optimization

- Engine performs parallel evaluation with waterfill optimization

- Returns optimal route with hop sequence and split allocations

Phase 2: Precise Simulation

- For each hop in the route:

  - Fetch binary pool state from Redis

  - Math Core calculates exact output using on-chain curve formulas (AMM/CLMM/Stable)

  - Output becomes input for next hop

- Final quote object returned to user

The two-phase approach is intentional. The routing engine handles the combinatorial explosion of path finding (which paths exist, how to split). The Math Core handles precision arithmetic for each selected path using exact on-chain formulas.
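Phase 2 amounts to a loop that threads the amount through each hop. The following is a minimal sketch under several assumptions: a `Map` stands in for Redis, every pool uses a made-up 16-byte layout holding two u64 reserves, the curve is a fee-free constant product, and the `Hop` shape and key names are hypothetical.

```typescript
// Hypothetical hop descriptor as it might come back from Phase 1.
interface Hop {
  poolKey: string; // cache key of the pool's binary state
  aToB: boolean;   // swap direction within the pool
}

// Walk the route hop by hop: read raw state, compute output, feed it forward.
function simulateRoute(store: Map<string, Uint8Array>, hops: Hop[], amountIn: bigint): bigint {
  let amount = amountIn;
  for (const hop of hops) {
    const bytes = store.get(hop.poolKey);
    if (!bytes) throw new Error("missing pool state: " + hop.poolKey);
    // Assumed layout: reserveA at offset 0, reserveB at offset 8, both u64 LE.
    const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
    const reserveA = view.getBigUint64(0, true);
    const reserveB = view.getBigUint64(8, true);
    const reserveIn = hop.aToB ? reserveA : reserveB;
    const reserveOut = hop.aToB ? reserveB : reserveA;
    // Fee-free constant product, rounded down; output becomes the next hop's input.
    amount = (reserveOut * amount) / (reserveIn + amount);
  }
  return amount;
}

// Helper for building sample state in the assumed layout.
function makeState(reserveA: bigint, reserveB: bigint): Uint8Array {
  const bytes = new Uint8Array(16);
  const view = new DataView(bytes.buffer);
  view.setBigUint64(0, reserveA, true);
  view.setBigUint64(8, reserveB, true);
  return bytes;
}

const store = new Map<string, Uint8Array>();
store.set("buffer:amm:sol-bonk", makeState(1000000n, 4000000n));  // A = SOL, B = BONK
store.set("buffer:amm:usdc-bonk", makeState(2000000n, 8000000n)); // A = USDC, B = BONK

// SOL -> BONK -> USDC
const route: Hop[] = [
  { poolKey: "buffer:amm:sol-bonk", aToB: true },
  { poolKey: "buffer:amm:usdc-bonk", aToB: false },
];
const finalOut = simulateRoute(store, route, 1000n);
```

With the sample reserves, 1,000 units of SOL become 3,996 BONK after the first hop and 998 USDC after the second. A split route would run this loop once per leg with that leg's share of the input and sum the results.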

Visual interpretation of the Smart Water-Fill flow:

7. DEX Adapter Pattern

The router core remains agnostic to specific DEX implementations. Each protocol implements a standard interface:

Supported Adapters:

- Raydium: AMM v4, CLMM, CPMM, Stable Swap

- Orca: V1, V2, Whirlpool

- Meteora: DLMM, DAMM, DAMM v2, Multi-token Stable

Standard Interface:

compute_amount_out(state, amount) → {output, fee, impact}

This abstraction allows rapid integration of new DEX protocols without modifying the core routing logic.
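In TypeScript, the interface above might look like the following sketch. Only the `compute_amount_out(state, amount)` name and the `{output, fee, impact}` fields come from the signature above; the field types, the meaning assigned to `fee` and `impact`, the 18-byte state layout, the registry, and the toy constant-product adapter are all assumptions for illustration.

```typescript
interface QuoteResult {
  output: bigint; // amount of the destination token received
  fee: bigint;    // fee taken from the input amount (assumed semantics)
  impact: number; // price impact as a fraction of the spot price (assumed semantics)
}

// Every DEX adapter exposes the same entry point over raw pool state.
interface DexAdapter {
  compute_amount_out(state: Uint8Array, amount: bigint): QuoteResult;
}

// Toy adapter over an assumed layout: reserveIn u64 @0, reserveOut u64 @8, feeBps u16 @16.
const constantProductAdapter: DexAdapter = {
  compute_amount_out(state: Uint8Array, amount: bigint): QuoteResult {
    const view = new DataView(state.buffer, state.byteOffset, state.byteLength);
    const reserveIn = view.getBigUint64(0, true);
    const reserveOut = view.getBigUint64(8, true);
    const feeBps = BigInt(view.getUint16(16, true));
    if (amount <= 0n || reserveIn === 0n || reserveOut === 0n) {
      return { output: 0n, fee: 0n, impact: 0 };
    }
    const fee = (amount * feeBps) / 10000n;
    const net = amount - fee;
    const output = (reserveOut * net) / (reserveIn + net);
    // Impact compares the realized output with what the spot price would give.
    const spotOutput = Number(net) * (Number(reserveOut) / Number(reserveIn));
    const impact = spotOutput > 0 ? 1 - Number(output) / spotOutput : 0;
    return { output, fee, impact };
  },
};

// The router core only knows pool-type keys, never protocol-specific math.
const adapters = new Map<string, DexAdapter>();
adapters.set("constant-product", constantProductAdapter);

function quote(poolType: string, state: Uint8Array, amount: bigint): QuoteResult {
  const adapter = adapters.get(poolType);
  if (!adapter) throw new Error("no adapter for pool type: " + poolType);
  return adapter.compute_amount_out(state, amount);
}

const state = new Uint8Array(18);
const stateView = new DataView(state.buffer);
stateView.setBigUint64(0, 1000000n, true);
stateView.setBigUint64(8, 1000000n, true);
stateView.setUint16(16, 30, true);

const result = quote("constant-product", state, 10000n);
```

Supporting a new protocol then means registering one more adapter under a new key; `quote` and everything upstream of it stay unchanged.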

8. Performance Characteristics

Key Metrics

- Quote Latency: <1ms

- On-chain Match: Exact

- State Freshness: Real-time

Component Comparison

| Component | CQ1 | Traditional Aggregators |
|---|---|---|
| Data Ingestion | Push (gRPC stream) | Poll (RPC requests) |
| State Storage | Binary buffers | JSON / cached objects |
| Pathfinding | Massively parallel | CPU sequential/threaded |
| Quote Precision | Exact curve replica | Estimated / approximated |

9. Conclusion

CQ1 is an entirely new approach to DeFi. By combining massively parallel pathfinding with binary-native state management and real-time data ingestion, the engine operates closer to the physical limits of what's possible given network latency and memory bandwidth.

For traders moving significant size or operating in volatile conditions, the difference between a 200ms-stale estimate and a real-time exact quote is the difference between a successful trade and a failed transaction.

Performance metrics represent architectural design limits. Real-world performance depends on network conditions, validator proximity, and trade complexity. CQ1 is proprietary Carbium technology.